
Contents

- Issues Arising from Poorly Designed AI Architecture

- Core Components of Generative AI Architecture

- Embracing New Methodologies in Generative AI Project Design

- Security and Ethical Considerations

- The Future of Generative AI - Key Architectural Trends to Watch

- Final Thoughts

- How Processica Can Help with Architecture and Development of Custom AI Solutions

Issues Arising from Poorly Designed AI Architecture

Designing the architecture of AI-based solutions is a critical step that significantly impacts their performance, scalability, and reliability. If the architecture is not properly designed, several issues can arise, undermining the effectiveness of the AI system. Here are some potential problems:

1. Performance Bottlenecks

Poorly designed AI architecture can lead to performance bottlenecks, where certain components of the system become overwhelmed by the workload. This can result in slow response times, decreased throughput, and overall inefficiency. For instance, inadequate data pipelines or suboptimal model deployment strategies can severely limit the system’s ability to process and analyze data in real time.

2. Scalability Challenges

AI solutions must be able to scale efficiently to handle increasing amounts of data and user demands. An inadequately designed architecture may lack the flexibility and scalability required to grow with the business. This can lead to system crashes, data loss, and the inability to support a growing user base or expanding datasets, ultimately hindering the AI solution’s capacity to evolve and improve over time.

3. Integration Issues

AI systems often need to integrate with various other systems, such as databases, third-party APIs, and legacy software. If the architecture does not account for seamless integration, it can result in compatibility issues, data silos, and fragmented workflows. This not only disrupts operations but also limits the AI solution’s ability to deliver comprehensive insights and functionality.
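One common way to contain integration problems is to translate every upstream source into a single internal schema at the system boundary, so downstream AI components never depend on a particular database or legacy format. The sketch below illustrates this adapter approach under assumed conditions: the `Document` schema, the two sources, and all field names (`id`, `body`, `ticketNumber`, and so on) are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Document:
    """Hypothetical internal schema that every upstream source is mapped into."""
    source: str
    doc_id: str
    text: str


# Each adapter translates one source's native record into a Document.
# The source names and field names are illustrative assumptions.
def from_crm(record: Dict[str, Any]) -> Document:
    return Document(source="crm", doc_id=str(record["id"]), text=record["body"])


def from_legacy_tickets(record: Dict[str, Any]) -> Document:
    text = f'{record["subject"]}\n{record["description"]}'
    return Document(source="tickets", doc_id=str(record["ticketNumber"]), text=text)


ADAPTERS: Dict[str, Callable[[Dict[str, Any]], Document]] = {
    "crm": from_crm,
    "tickets": from_legacy_tickets,
}


def ingest(source: str, records: List[Dict[str, Any]]) -> List[Document]:
    """Normalize raw records so downstream AI components see a single format."""
    adapter = ADAPTERS[source]
    return [adapter(record) for record in records]


docs = ingest("crm", [{"id": 7, "body": "Customer asks about refunds."}])
docs += ingest("tickets", [{"ticketNumber": "T-1", "subject": "Login", "description": "Cannot sign in."}])
```

Adding a new source then means writing one more adapter function rather than touching the model or the rest of the pipeline.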

4. Security Vulnerabilities

A poorly designed AI architecture can expose the system to numerous security threats. Inadequate security measures, such as insufficient data encryption, lack of access controls, and vulnerable communication protocols, can lead to data breaches, unauthorized access, and malicious attacks. Ensuring robust security practices within the architecture is essential to protect sensitive information and maintain user trust.

5. Maintenance Difficulties

Maintaining and updating AI systems is an ongoing process that requires a well-structured architecture. If the design is overly complex or lacks modularity, it can complicate maintenance efforts. This can result in longer downtimes, higher costs, and increased difficulty in implementing updates or fixes. A clear, modular architecture facilitates easier troubleshooting, upgrades, and enhancements.

6. Bias and Ethical Concerns

Ethical considerations are paramount in AI development. An architecture that does not properly address data biases, fairness, and transparency can lead to biased outcomes and ethical dilemmas. For instance, if the data preprocessing and model training processes do not include mechanisms to detect and mitigate biases, the AI system may perpetuate or even exacerbate existing biases, leading to unfair and discriminatory outcomes.
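As one concrete example of a bias-mitigation step during data preprocessing, the reweighing technique gives each (group, label) combination the weight `P(group) * P(label) / P(group, label)`, so that after weighting the label no longer correlates with group membership. The minimal sketch below assumes every training example already carries a group attribute and a binary label; the toy data is invented for illustration.

```python
from collections import Counter
from typing import Dict, List, Tuple


def reweigh(samples: List[Tuple[str, int]]) -> Dict[Tuple[str, int], float]:
    """Weight per (group, label) pair: P(group) * P(label) / P(group, label).

    Expressed with counts: (n_group * n_label) / (n * n_group_label).
    """
    n = len(samples)
    group_counts = Counter(group for group, _ in samples)
    label_counts = Counter(label for _, label in samples)
    joint_counts = Counter(samples)
    return {
        (group, label): group_counts[group] * label_counts[label] / (n * count)
        for (group, label), count in joint_counts.items()
    }


# Toy data (assumed): group "A" is labeled positive far more often than group "B".
training = [("A", 1)] * 3 + [("A", 0)] * 1 + [("B", 1)] * 1 + [("B", 0)] * 3
weights = reweigh(training)
```

In this toy data, group A's single negative example and group B's single positive example each receive a weight of 2.0, while the overrepresented combinations are down-weighted to about 0.67. The weights would then be applied as per-sample weights in the training loss.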

7. Operational Inefficiencies

Operational inefficiencies can arise from an architecture that does not align with the operational needs of the business. This includes poor resource allocation, inefficient workflows, and lack of automation. These inefficiencies can increase operational costs, reduce productivity, and limit the AI system’s ability to deliver timely and actionable insights.

The architecture of AI-based solutions plays a crucial role in determining their success and effectiveness. To avoid these pitfalls, it is essential to adopt a holistic and thoughtful approach to AI architecture design, ensuring that all aspects of performance, scalability, security, and ethics are carefully considered and integrated.

Core Components of Generative AI Architecture

The architecture of generative AI projects is built around several key components that work in concert to deliver powerful and flexible AI-driven solutions. At the heart of this architecture lies the AI model itself, which serves as the engine for content generation. The choice of model, whether it's a pre-trained large language model (LLM) such as GPT or a custom-built one, is crucial and often depends on the specific requirements of the project. Fine-tuning these models on domain-specific data can significantly enhance their performance for particular use cases.

Surrounding the AI model is a robust infrastructure designed to support its computational needs and manage data flow. This typically includes high-performance computing resources, often leveraging GPUs or TPUs for accelerated processing, and scalable storage solutions to handle the vast amounts of data required for training and inference. The infrastructure must be designed with flexibility in mind, allowing for easy scaling to accommodate growing demand and evolving model complexity.

Interfaces form another critical component, bridging the gap between the AI model and its users. This encompasses both the API layer, which allows programmatic access to the model’s capabilities, and user interfaces that provide intuitive ways for end-users to interact with the AI. These interfaces must be designed with consideration for the unique characteristics of generative AI, such as handling streaming responses or managing context in conversational applications.
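Managing context in a conversational application usually means fitting the running dialogue into the model's limited context window. A simple policy, sketched below, always keeps the system prompt and then retains the newest turns that still fit a token budget. Counting tokens by splitting on whitespace is an assumption made to keep the example self-contained; a real system would count with the target model's own tokenizer.

```python
from typing import Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}


def count_tokens(text: str) -> int:
    # Crude stand-in (assumption): real systems use the model's tokenizer.
    return len(text.split())


def trim_context(messages: List[Message], budget: int) -> List[Message]:
    """Keep the system prompt plus the most recent turns that fit in `budget`."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(count_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    for message in reversed(turns):  # walk from newest to oldest
        cost = count_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return system + list(reversed(kept))  # restore chronological order


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is caching?"},
    {"role": "assistant", "content": "Caching stores results for reuse."},
    {"role": "user", "content": "And batching?"},
]
trimmed = trim_context(history, budget=12)
```

With a budget of 12 "tokens", the oldest user turn is dropped while the system prompt and the two most recent turns survive. Summarizing dropped turns instead of discarding them is one possible refinement.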

Monitoring and analytics systems play a vital role in ensuring the ongoing performance and quality of generative AI systems. These components track various metrics, from output quality and response times to usage patterns and error rates. They provide valuable insights that inform continuous improvement efforts and help maintain the system's reliability and effectiveness over time.
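To make these monitoring metrics concrete, the sketch below tracks two of them, response time and error rate, and reports latency percentiles using the nearest-rank method. It is an in-memory illustration only: the class name and the sample latencies are hypothetical, and a production deployment would typically export such measurements to a dedicated monitoring system.

```python
import math
from dataclasses import dataclass, field
from typing import List


@dataclass
class RequestMetrics:
    """Illustrative in-memory tracker for latency and error rate."""
    latencies_ms: List[float] = field(default_factory=list)
    errors: int = 0

    def record(self, latency_ms: float, ok: bool) -> None:
        self.latencies_ms.append(latency_ms)
        if not ok:
            self.errors += 1

    def error_rate(self) -> float:
        return self.errors / len(self.latencies_ms) if self.latencies_ms else 0.0

    def percentile(self, p: float) -> float:
        """Nearest-rank percentile, e.g. p=95 for p95 latency."""
        if not self.latencies_ms:
            return 0.0
        ordered = sorted(self.latencies_ms)
        rank = max(1, math.ceil(p / 100 * len(ordered)))
        return ordered[rank - 1]


metrics = RequestMetrics()
# Hypothetical sample: requests slower than 1,000 ms are treated as failures (timeouts).
for latency in [120, 95, 300, 110, 105, 2000, 130, 98, 115, 101]:
    metrics.record(latency, ok=latency < 1000)
```

Percentiles separate typical latency from tail latency: in this sample the median request takes 110 ms while the p95 is 2,000 ms, and the error rate is 10%.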

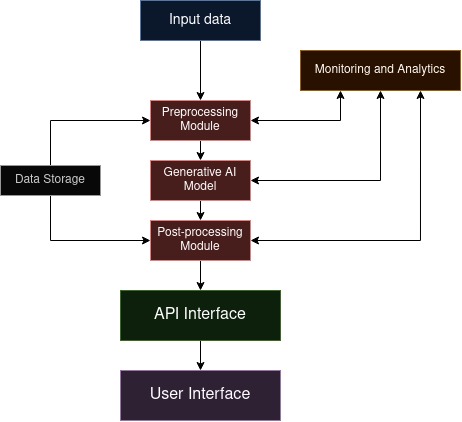

This diagram illustrates the flow of data and processes in a typical generative AI architecture. The input data is first preprocessed and then fed into the generative AI model. The model’s output undergoes post-processing before being served through an API interface to the user interface. Throughout this process, monitoring and analytics systems oversee key components, while data storage supports both preprocessing and post-processing stages.
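The flow in the diagram can be expressed as a chain of interchangeable stages, with a monitoring hook that times each one. In the sketch below, `fake_model` is a placeholder for a real generative model, the data-storage component is omitted, and the stage names and the `handle_api_request` function are hypothetical.

```python
import time
from typing import Callable, Dict, List

Stage = Callable[[str], str]


def preprocess(text: str) -> str:
    return " ".join(text.split())  # normalize whitespace


def fake_model(prompt: str) -> str:
    # Placeholder (assumption) for a call to a real generative model.
    return f"Echo: {prompt}"


def postprocess(output: str) -> str:
    return output.strip()


class Pipeline:
    """Chains swappable stages and records how long each one takes."""

    def __init__(self, stages: Dict[str, Stage]) -> None:
        self.stages = stages  # dicts preserve insertion order (Python 3.7+)
        self.timings_ms: Dict[str, List[float]] = {name: [] for name in stages}

    def run(self, data: str) -> str:
        for name, stage in self.stages.items():
            start = time.perf_counter()
            data = stage(data)
            # Monitoring hook: per-stage latency in milliseconds.
            self.timings_ms[name].append((time.perf_counter() - start) * 1000)
        return data


pipeline = Pipeline({"preprocess": preprocess, "model": fake_model, "postprocess": postprocess})


def handle_api_request(payload: Dict[str, str]) -> Dict[str, str]:
    """Hypothetical API-layer handler that the user interface would call."""
    return {"output": pipeline.run(payload["input"])}


result = handle_api_request({"input": "  hello   world "})
```

Because every stage shares one signature, a stage such as the model can be replaced without changing the others, which also eases the maintenance concerns described earlier.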

Embracing New Methodologies in Generative AI Project Design

Designing projects with generative AI at their core requires a shift in approach from traditional software development. One of the primary considerations is data collection and preparation. Unlike conventional systems, generative AI models often require vast amounts of high-quality, diverse data for training. This data must be carefully curated, cleaned, and structured to ensure the model can learn effectively and generate accurate, relevant outputs. Designers must also consider privacy and ethical implications when collecting and using this data.
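A minimal version of the cleaning and curation step might normalize text, discard fragments too short to be useful, and remove exact duplicates by hashing. The sketch below assumes plain-text documents; the three-word minimum is an arbitrary placeholder, and real pipelines often add near-duplicate detection, language filtering, and personal-data handling on top.

```python
import hashlib
import re
from typing import Iterable, List


def clean(text: str) -> str:
    text = re.sub(r"<[^>]+>", " ", text)     # strip leftover HTML tags
    return re.sub(r"\s+", " ", text).strip()  # collapse whitespace


def prepare_corpus(raw_docs: Iterable[str], min_words: int = 3) -> List[str]:
    """Clean documents, drop very short ones, and remove exact duplicates."""
    seen = set()
    prepared = []
    for doc in raw_docs:
        text = clean(doc)
        if len(text.split()) < min_words:  # placeholder threshold (assumption)
            continue
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:  # exact duplicate after normalization
            continue
        seen.add(digest)
        prepared.append(text)
    return prepared


corpus = prepare_corpus([
    "<p>Generative models need   clean data.</p>",
    "Generative models need clean data.",
    "Too short",
    "Diverse examples improve coverage.",
])
```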

The generation process itself introduces unique challenges. Post-processing becomes crucial in refining the raw output from the AI model. This may involve filtering inappropriate content, formatting the generated text or code, or even combining multiple generations to produce the final result. Ensuring the quality and relevance of the generated content is an ongoing process that often requires human oversight and continuous refinement of the model and post-processing algorithms.
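The post-processing steps described above (filtering, formatting, and choosing among multiple generations) can be sketched as a small function. The keyword blocklist and the use of `len` as a scoring function are deliberately simplistic stand-ins assumed for illustration; production systems generally rely on trained moderation and quality-scoring models instead.

```python
import re
from typing import Callable, List, Optional

# Hypothetical blocklist; real systems usually use a moderation classifier.
BLOCKLIST = {"badword"}


def is_safe(text: str) -> bool:
    words = set(re.findall(r"[a-z']+", text.lower()))
    return not (words & BLOCKLIST)


def format_output(text: str) -> str:
    return re.sub(r"\s+", " ", text).strip()


def select_best(candidates: List[str], score: Callable[[str], float]) -> Optional[str]:
    """Drop unsafe generations, format the rest, and keep the top-scoring one."""
    safe = [format_output(c) for c in candidates if is_safe(c)]
    return max(safe, key=score) if safe else None


candidates = [
    "Short  answer.",
    "This contains badword here.",
    "A longer,   more complete answer.",
]
# `len` is a toy scoring function (assumption) standing in for a quality model.
best = select_best(candidates, score=len)
```

Generating several candidates and keeping the best one trades extra compute for quality, so the number of candidates is itself a tuning parameter.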

Scalability is another critical factor in designing generative AI systems. These projects often need to handle a high volume of requests, each potentially requiring significant computational resources. Strategies for efficient scaling might include distributed processing, load balancing, and intelligent request routing. Moreover, optimizing latency and throughput becomes essential, especially for real-time applications. Techniques such as model quantization, batching requests, and leveraging hardware acceleration can help improve performance.
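Request batching helps throughput because hardware accelerators often process a batch of inputs in one pass much more efficiently than the same inputs one at a time. The sketch below shows the simplest (static) form: pending prompts are grouped into batches of at most `max_batch_size`, and the model is called once per batch. `fake_batch_model` is a placeholder for a real batched inference call.

```python
from typing import Callable, List


def run_batched(prompts: List[str],
                model_batch_fn: Callable[[List[str]], List[str]],
                max_batch_size: int = 8) -> List[str]:
    """Invoke the model once per batch instead of once per prompt."""
    outputs: List[str] = []
    for start in range(0, len(prompts), max_batch_size):
        batch = prompts[start:start + max_batch_size]
        outputs.extend(model_batch_fn(batch))
    return outputs


batch_sizes: List[int] = []


def fake_batch_model(batch: List[str]) -> List[str]:
    # Placeholder (assumption) for one forward pass over a whole batch.
    batch_sizes.append(len(batch))
    return [prompt.upper() for prompt in batch]


results = run_batched([f"prompt {i}" for i in range(20)], fake_batch_model, max_batch_size=8)
```

Twenty prompts result in three model calls (8, 8, and 4 prompts) instead of twenty. Serving frameworks often go further with dynamic batching, which briefly waits to collect requests that arrive close together.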

Caching and distributed systems play a vital role in enhancing the efficiency of generative AI projects. Caching frequently requested generations or intermediate results can significantly reduce computational load and improve response times. Distributed systems architecture allows for better resource utilization and fault tolerance. However, implementing these strategies in the context of generative AI presents unique challenges, such as managing the coherence of cached data across distributed nodes and ensuring consistent performance across a distributed model.
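A generation cache can be as simple as a least-recently-used (LRU) map keyed on a hash of the prompt together with its sampling parameters, since the same prompt with different settings can yield different output. The sketch below is single-process and assumes outputs are deterministic enough to reuse (for example, temperature 0); caching sampled outputs would return the same answer to every caller. Keeping a cache coherent across distributed nodes would require a shared external store, which is not shown.

```python
import hashlib
import json
from collections import OrderedDict
from typing import Callable, List, Optional


class GenerationCache:
    """LRU cache for generations, keyed on the prompt plus sampling parameters."""

    def __init__(self, capacity: int = 1024) -> None:
        self.capacity = capacity
        self._store: "OrderedDict[str, str]" = OrderedDict()

    @staticmethod
    def key(prompt: str, params: dict) -> str:
        payload = json.dumps({"prompt": prompt, "params": params}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get(self, k: str) -> Optional[str]:
        if k not in self._store:
            return None
        self._store.move_to_end(k)  # mark as most recently used
        return self._store[k]

    def put(self, k: str, value: str) -> None:
        self._store[k] = value
        self._store.move_to_end(k)
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)  # evict the least recently used entry


def cached_generate(cache: GenerationCache, prompt: str, params: dict,
                    generate: Callable[[str, dict], str]) -> str:
    k = cache.key(prompt, params)
    hit = cache.get(k)
    if hit is not None:
        return hit
    result = generate(prompt, params)
    cache.put(k, result)
    return result


model_calls: List[str] = []


def fake_generate(prompt: str, params: dict) -> str:
    model_calls.append(prompt)  # count how often the "model" actually runs
    return prompt[::-1]


cache = GenerationCache(capacity=2)
first = cached_generate(cache, "hello", {"temperature": 0.0}, fake_generate)
second = cached_generate(cache, "hello", {"temperature": 0.0}, fake_generate)
```

The second call is served from the cache, so the placeholder model runs only once.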

Designers must also consider the iterative nature of generative AI development. Unlike traditional software where features are often clearly defined from the outset, generative AI projects may evolve as the model’s capabilities are better understood and refined. This necessitates a flexible architecture that can accommodate frequent updates to the model, data pipeline, and post-processing algorithms without disrupting the overall system.
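One way to ship frequent model updates without disruption is a gradual (canary) rollout: a small, stable share of users is routed to the new model version while everyone else stays on the current one. The sketch below assigns users to buckets by hashing their ID, so each user consistently sees the same version. The version names and the toy model functions are hypothetical.

```python
import hashlib
from typing import Callable, Dict


class ModelRouter:
    """Routes a fixed share of users to a candidate model version."""

    def __init__(self, stable: str, candidate: str, candidate_share: float) -> None:
        self.stable = stable
        self.candidate = candidate
        self.candidate_share = candidate_share  # between 0.0 and 1.0

    def version_for(self, user_id: str) -> str:
        # Hash the user ID into a stable bucket 0..9999 so each user
        # keeps seeing the same version across requests.
        bucket = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 10_000
        return self.candidate if bucket < self.candidate_share * 10_000 else self.stable


# Hypothetical version identifiers mapped to toy model callables.
MODELS: Dict[str, Callable[[str], str]] = {
    "summarizer-v1": lambda text: text[:20],
    "summarizer-v2": lambda text: text[:40],
}

router = ModelRouter(stable="summarizer-v1", candidate="summarizer-v2", candidate_share=0.1)


def generate(user_id: str, text: str) -> str:
    return MODELS[router.version_for(user_id)](text)
```

Rolling back amounts to setting `candidate_share` to 0, and promoting the new version means making it the stable one; no other part of the system has to change.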

Security and Ethical Considerations

Security and ethical considerations are paramount in the architecture of generative AI projects, presenting unique challenges that extend beyond traditional software development concerns. Data privacy is a critical issue, as these systems often handle sensitive information during both training and inference phases. Architects must implement robust encryption mechanisms for data in transit and at rest, ensuring that personal information is protected throughout the AI pipeline. Additionally, access control systems must be meticulously designed to prevent unauthorized use of the model or exposure of sensitive data.
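Encryption in transit and at rest is usually provided by TLS and by the storage layer, so it is not reproduced here; the sketch below covers only the access-control side. It assumes API keys mapped to roles and roles mapped to permitted actions, denies anything not explicitly allowed, and compares keys with `hmac.compare_digest` so that timing does not reveal how much of a key matched. The roles, actions, and demo keys are hypothetical.

```python
import hmac
from typing import Dict, Optional, Set

# Hypothetical role -> permitted actions for a model-serving API.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"generate"},
    "analyst": {"generate", "read_logs"},
    "admin": {"generate", "read_logs", "update_model"},
}

# Demo keys only (assumption); real keys belong in a secrets manager.
API_KEYS: Dict[str, str] = {"k-demo-viewer": "viewer", "k-demo-admin": "admin"}


def role_for_key(presented_key: str) -> Optional[str]:
    role = None
    for stored_key, stored_role in API_KEYS.items():
        # compare_digest avoids leaking how many leading characters matched.
        if hmac.compare_digest(presented_key.encode(), stored_key.encode()):
            role = stored_role
    return role


def authorize(presented_key: str, action: str) -> bool:
    """Deny by default: unknown keys and unlisted actions are rejected."""
    role = role_for_key(presented_key)
    return role is not None and action in ROLE_PERMISSIONS.get(role, set())
```

In a real deployment the keys would be stored hashed in a secrets manager rather than in source code.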

The potential for bias in generative AI systems is a significant ethical concern that must be addressed at the architectural level. Bias can be introduced through training data, model design, or even the way outputs are processed and presented. Mitigating this requires a multi-faceted approach, including diverse and representative training data, regular audits of model outputs for bias, and the implementation of fairness metrics in the monitoring and analytics systems. Architects should also consider incorporating explainable AI techniques to provide transparency into the model’s decision-making process, which can help identify and address bias.
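One fairness metric that can be computed inside a monitoring system is the demographic parity difference: the gap between the highest and lowest rate of favorable outcomes across groups, where 0 means all groups are treated alike. The sketch below assumes logged outcomes can be labeled with a group and a favorable/unfavorable flag (for example, whether a request was answered rather than refused); the audit data and the alert threshold are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def favorable_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favorable outcomes per group."""
    totals: Dict[str, int] = defaultdict(int)
    favorable: Dict[str, int] = defaultdict(int)
    for group, is_favorable in outcomes:
        totals[group] += 1
        favorable[group] += int(is_favorable)
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_gap(outcomes: Iterable[Tuple[str, bool]]) -> float:
    """Largest difference in favorable-outcome rate between groups (0 = parity)."""
    rates = favorable_rates(outcomes)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


ALERT_THRESHOLD = 0.1  # hypothetical tolerance chosen by the team

# Invented audit log: group A gets favorable outcomes 80% of the time, group B 60%.
audit_log = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 6 + [("B", False)] * 4
gap = demographic_parity_gap(audit_log)
needs_review = gap > ALERT_THRESHOLD
```

Demographic parity is only one of several fairness definitions, and the appropriate metric depends on the application. Here, a gap of 0.2 exceeds the assumed threshold and would flag the model for review.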

Another crucial ethical consideration is the potential misuse of generative AI technology. These powerful systems can be exploited to create misinformation, deepfakes, or other harmful content. Architectural safeguards must be put in place to prevent such misuse, which may include content filtering systems, user authentication mechanisms, and rate limiting to prevent automated abuse. Moreover, architects should consider implementing traceability features that can help attribute generated content to specific users or sessions, aiding in accountability.
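Rate limiting is commonly implemented with a token bucket: each user has a bucket holding up to `capacity` tokens, every request spends one token, and tokens refill at a fixed `rate` per second. This permits short bursts while capping sustained throughput. The sketch below keeps one bucket per authenticated user in memory; the limits are hypothetical, and the injectable clock exists only to make the behavior easy to demonstrate.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


buckets: Dict[str, TokenBucket] = {}


def allow_request(user_id: str) -> bool:
    """One bucket per authenticated user; the limits are hypothetical."""
    if user_id not in buckets:
        buckets[user_id] = TokenBucket(capacity=5, rate=0.5)
    return buckets[user_id].allow()


# Demonstration with a controllable clock.
fake_now = [0.0]
bucket = TokenBucket(capacity=2, rate=1.0, clock=lambda: fake_now[0])
burst = [bucket.allow() for _ in range(3)]  # the third request exceeds the burst
fake_now[0] = 1.0                           # one second later, one token has refilled
after_wait = bucket.allow()
```

Because buckets are keyed by user, the same identifier can also be attached to logged generations, which supports the traceability described above.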

The environmental impact of large-scale AI models is an emerging ethical concern that architects must address. The substantial computational resources required for training and running these models can result in significant energy consumption and carbon emissions. Sustainable architecture design principles should be employed, such as optimizing model efficiency, using renewable energy sources for data centers, and implementing smart scaling strategies to minimize unnecessary computation.
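Smart scaling can be as simple as running only as many model replicas as the current load requires, including scaling to zero when there is no traffic so idle accelerators can be released (at the cost of cold-start latency for the next request). The sketch below applies a proportional rule, similar in spirit to the one used by Kubernetes' Horizontal Pod Autoscaler; the per-replica target and the replica bounds are hypothetical values.

```python
import math


def desired_replicas(total_rps: float, target_rps_per_replica: float,
                     min_replicas: int = 0, max_replicas: int = 20) -> int:
    """Run just enough replicas to keep each one near its target load.

    total_rps: current requests per second across the service.
    target_rps_per_replica: load one replica should handle (assumed value).
    """
    needed = math.ceil(total_rps / target_rps_per_replica)
    return max(min_replicas, min(max_replicas, needed))


idle = desired_replicas(0, target_rps_per_replica=4)     # no traffic: scale to zero
normal = desired_replicas(10, target_rps_per_replica=4)  # 2.5 rounds up to 3
peak = desired_replicas(500, target_rps_per_replica=4)   # capped at max_replicas
```

In practice, some form of smoothing or cooldown is usually added so that brief traffic spikes do not cause replicas to start and stop repeatedly.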

Lastly, architects must consider the broader societal implications of their generative AI systems. This includes potential impacts on employment, creative industries, and information ecosystems. Responsible architecture should incorporate mechanisms for ongoing assessment of these impacts, as well as features that complement and augment human capabilities rather than merely replacing them. This might involve designing hybrid systems that combine AI generation with human curation or oversight, ensuring that the technology serves as a tool for human empowerment rather than displacement.

The Future of Generative AI - Key Architectural Trends to Watch

The landscape of generative AI is rapidly evolving, and several emerging trends are set to shape the future of project architecture in this field. One significant trend is the move towards more efficient and specialized AI models. While current architectures often rely on large, general-purpose models, future systems may increasingly utilize smaller, domain-specific models that are more computationally efficient and easier to deploy. This shift could lead to more distributed architectures, where multiple specialized models work in tandem to generate complex outputs.

Edge computing is poised to play a more prominent role in generative AI architectures. As models become more efficient, running generative AI on edge devices becomes increasingly feasible. This trend could lead to architectures that prioritize local processing, reducing latency and addressing privacy concerns by keeping sensitive data on the user’s device. Future designs may need to accommodate hybrid systems that seamlessly integrate edge and cloud-based AI processing.

The integration of multimodal AI capabilities is another emerging trend that will impact architecture design. Future systems will likely need to handle not just text, but also images, audio, and video inputs and outputs. This shift towards multimodal AI will require more complex data pipelines, enhanced storage solutions, and sophisticated preprocessing and post-processing modules capable of handling diverse data types.

Advancements in AI model interpretability and explainability are expected to influence future architectures significantly. As regulatory scrutiny increases and users demand more transparency, architects will need to incorporate robust systems for tracking and explaining AI decisions. This may lead to the development of new architectural layers dedicated to generating human-understandable explanations for model outputs.

The concept of AI-assisted coding and architecture design is itself a growing trend that could revolutionize how generative AI projects are built. Future systems may incorporate AI tools that can suggest architectural improvements, generate boilerplate code, or even autonomously handle certain aspects of system design. This could lead to more adaptive and self-optimizing architectures that evolve based on usage patterns and performance metrics.

Lastly, the ethical considerations surrounding AI are likely to become even more central to architectural design. Future trends point towards the development of built-in ethical frameworks and real-time bias detection systems. Architectures may need to incorporate dynamic consent mechanisms, allowing users greater control over how their data is used in AI systems. Additionally, we may see the emergence of standardized ethical assessment modules that can be integrated into AI pipelines to ensure compliance with evolving regulations and societal norms.

Final Thoughts

Architecting generative AI projects represents a paradigm shift in software development, demanding a holistic approach that goes beyond traditional design principles. The core components of these systems – from the AI model itself to the surrounding infrastructure, interfaces, and monitoring systems – form a complex ecosystem that must be carefully balanced to achieve optimal performance, scalability, and ethical compliance. As we’ve explored, designing for generative AI involves unique considerations in data handling, processing pipelines, scalability strategies, and security measures. The architecture must be flexible enough to accommodate the iterative nature of AI development while robust enough to handle the intensive computational demands and vast data flows inherent to these systems.

Looking ahead, the field of generative AI architecture is poised for continued evolution. Future trends point towards more efficient and specialized models, increased edge computing capabilities, multimodal AI integration, and enhanced explainability. These advancements will bring new challenges and opportunities for architects, necessitating ongoing adaptation and innovation.

How Processica Can Help with Architecture and Development of Custom AI Solutions

Processica stands at the forefront of generative AI development, providing comprehensive services that cater to the unique needs of each client. Our expertise spans various domains, ensuring that we can design and implement AI systems that drive innovation, enhance productivity, and promote growth. Here’s how Processica can assist in architecting and developing custom AI solutions:

Generative AI Implementation Services

At Processica, our generative AI implementation services are designed to create a robust foundation for your AI initiatives. We provide end-to-end solutions that encompass everything from initial consultation and system design to deployment and ongoing maintenance. By leveraging our expertise, businesses can ensure that their AI systems are optimized for performance, scalability, and ethical compliance.

Custom Generative AI Development

Our seasoned team of generative AI experts excels in delivering bespoke AI solutions tailored to your specific business objectives and challenges. We understand that each business is unique, and we design AI systems that enhance productivity, encourage innovation, and drive growth. Our range of services includes AI-driven data analysis and smart automation, ensuring that your generative AI initiatives lead to business excellence.

Generative AI App Development

We specialize in developing pioneering generative AI-infused applications that employ the latest technologies to provide unique and engaging user experiences. Whether it’s natural language processing, computer vision, or predictive analytics, our expertise ensures that your mobile and web applications offer personalized, efficient, and user-friendly experiences. By integrating advanced AI capabilities, we help you stand out in a competitive market.

Generative AI Chatbot Development

Our generative AI chatbot development services focus on creating intelligent, responsive, and context-aware chatbots. Utilizing the latest GPT models and other advanced technologies, we design chatbots capable of handling complex queries and providing personalized assistance. This not only enhances customer service but also improves overall customer satisfaction and loyalty, giving your business a significant edge.

Proficiency in Advanced Generative Technologies

Processica’s team is proficient in advanced AI and ML technologies, including generative models such as GANs, VAEs, and Transformer-based models. We leverage these technologies to build sophisticated AI models that generate new data, uncover hidden patterns, and facilitate data-driven decision-making. Our generative AI development services ensure that you stay ahead of the curve by harnessing the latest advancements in artificial intelligence and machine learning.

By partnering with Processica, businesses can navigate the complexities of generative AI architecture and development with confidence. Our holistic approach, combined with our deep expertise and commitment to innovation, ensures that your AI projects are not only successful but also aligned with ethical standards and business goals. Embrace the future of AI-driven innovation with Processica and unlock new possibilities for growth and efficiency in your organization.