
Contents

- The Critical Role of AI Voice Bots in Addressing Key Challenges

- Understanding Conversational AI Bots

- Methods of Conversational Chatbots

- How do LLMs Change Things?

- Why Voice is Essential for AI Bots

- How Does It Work?

- Get Ready for the Future with Processica

The Critical Role of AI Voice Bots in Addressing Key Challenges

The need for AI voice bots is increasingly evident across various sectors due to their ability to address several critical challenges:

Customer Support Overload

AI voice bots powered by Large Language Models (LLMs) can manage the growing demand for 24/7 customer support by efficiently handling high volumes of routine inquiries. This reduces wait times and frees human agents to focus on more complex issues, thereby enhancing overall service quality.

Limited Accessibility

Conversational AI significantly improves accessibility by assisting individuals with disabilities or those lacking proficiency in written language. This bridges communication gaps and promotes inclusive access to services and information.

Inadequate User Engagement

Traditional chatbots often fail to understand nuanced queries, leading to user frustration. In contrast, LLM-powered voice bots offer more intuitive and natural interactions, resulting in higher user satisfaction and engagement.

Mental Health Support in Healthcare

AI voice bots can play a vital role in mental health support by offering empathetic, interactive conversations. These bots can serve as preliminary touchpoints for individuals seeking psychological guidance, a role that is especially important amid therapist shortages.

Collectively, these capabilities underscore the transformative potential of AI voice bots in enhancing customer service, accessibility, user engagement, and mental health support.

Understanding Conversational AI Bots

Conversational AI bots are sophisticated software entities designed to replicate human-like interaction through written or spoken language, generally with the objective of performing specific functions or tasks without the need for a human counterpart. These bots go beyond simple question-and-answer routines, instead providing a more dynamic and engaging conversational experience. As Artificial Intelligence has advanced, particularly with developments in Generative AI, the capabilities of conversational bots have expanded significantly. Modern conversational bots are no longer limited to rigid scripts and can manage more complex and nuanced discussions. Because they can remember past interactions and adapt to conversational context, these systems show an improved understanding of intent and can often anticipate user needs.

The incorporation of Conversational AI has marked a transformative shift in how businesses interact with customers. By offering a level of service that closely mimics human interaction, these bots can make users feel understood and valued, fostering a more satisfying and intuitive experience. Their role spans customer service, support, and numerous other functions across industries. By enabling prompt, efficient, and empathetic communication, these bots are setting new standards for digital interaction and meeting the expectations of modern consumers, who increasingly value immediacy and effectiveness in communication.

AI voice bots, leveraging large language models (LLMs), are poised to revolutionize the way we interact with technology, offering immersive conversational experiences across a range of personal and professional applications.

As AI tutors, these sophisticated bots can provide personalized, interactive learning experiences, adapting their instructional style to each student’s pace and learning preferences, making education more accessible and tailored than ever before. In the realm of consulting, AI voice bots serve as ever-available consultants, capable of dispensing expert advice on diverse topics such as finance, business strategy, or legal matters, drawing from vast databases of knowledge to offer insights that were traditionally gated by human availability.

For those seeking companionship, AI companions can simulate meaningful interactions, offering conversation and comfort akin to a virtual friend who is always ready to listen and respond empathetically to human emotions, a feature that is especially valuable for elderly individuals or others in need of social interaction. AI psychologists are also emerging as proofs of concept (PoCs), providing preliminary mental health support through empathetic dialogue and cognitive behavioral techniques. They offer a judgment-free space for users to express their concerns and receive guidance, serving as a stepping stone to professional human care.

These use cases illustrate a future where conversational AI becomes an integral part of everyday life, augmenting human communication and offering support in ways that were once the exclusive domain of human-to-human interaction.

Methods of Conversational Chatbots

Conversational AI encompasses a diverse range of chatbots, typically classified as closed-domain or open-domain. Closed-domain chatbots have a narrow focus and handle specific tasks such as customer service requests or tutoring. Their primary goal is to supply precise responses within a limited context, and they avoid discussions outside their area of expertise. Open-domain chatbots, by contrast, aim for versatility, emulating human-like conversation on varied topics and relying on sophisticated deep learning to keep conversations relevant and engaging.

Chatbots have evolved thanks to foundational techniques that predate large language models like GPT. Some earlier chatbots used reinforcement learning to improve through conversation, balancing known, successful strategies against the exploration of new patterns.
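The balance between known strategies and exploring new ones is the classic exploration/exploitation trade-off in reinforcement learning. One of the simplest ways to handle it is an epsilon-greedy policy, sketched below. This is a hypothetical illustration, not how any particular chatbot was built: the `EpsilonGreedySelector` class, the three response strategies, and the simulated reward (a positive user reaction) are all assumptions.

```python
import random
from typing import Dict, Iterable, Optional


class EpsilonGreedySelector:
    """Hypothetical epsilon-greedy selector over dialogue response strategies."""

    def __init__(self, strategies: Iterable[str], epsilon: float = 0.1, seed: Optional[int] = None):
        self.strategies = list(strategies)
        self.epsilon = epsilon
        self.counts: Dict[str, int] = {s: 0 for s in self.strategies}
        self.values: Dict[str, float] = {s: 0.0 for s in self.strategies}  # mean reward so far
        self.rng = random.Random(seed)

    def select(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.strategies)  # explore: try any strategy
        return max(self.strategies, key=lambda s: self.values[s])  # exploit: best so far

    def update(self, strategy: str, reward: float) -> None:
        # Incremental mean: v <- v + (r - v) / n
        self.counts[strategy] += 1
        self.values[strategy] += (reward - self.values[strategy]) / self.counts[strategy]


# Simulated users: each strategy has a hidden probability of a positive reaction.
TRUE_SUCCESS = {"small_talk": 0.1, "give_direct_answer": 0.5, "ask_clarifying_question": 0.9}
selector = EpsilonGreedySelector(TRUE_SUCCESS, epsilon=0.1, seed=42)
users = random.Random(0)
for _ in range(2000):
    strategy = selector.select()
    reward = 1.0 if users.random() < TRUE_SUCCESS[strategy] else 0.0
    selector.update(strategy, reward)

best = max(selector.values, key=selector.values.get)
print(best, selector.counts)
```

Most of the time the selector exploits whichever strategy has earned the highest average reward, but the small `epsilon` keeps it occasionally trying alternatives, so it can discover that a strategy it initially undervalued actually works better.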

Long Short-Term Memory (LSTM) networks significantly advanced chatbot conversational memory. Their gating mechanisms selectively retain or discard information, which lets them track context over longer interactions and overcomes the difficulty earlier Recurrent Neural Network (RNN) models had with long-range dependencies. Further advances, including Seq2Seq models, transfer learning, contextual representations such as ELMo and BERT, and generative adversarial networks (GANs), refined chatbots’ language processing and made them better at handling the ambiguities of human language. For closed-domain bots, especially in education, development has centered on experimental setups that optimize knowledge delivery.
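An LSTM’s selective memory comes from three gates: a forget gate decides how much of the old cell state to keep, an input gate decides how much new content to write, and an output gate decides how much of the memory to expose. The sketch below implements one step of a scalar LSTM cell using the standard gate equations. The weights are hand-picked, hypothetical values (real models learn weight matrices over vectors), chosen only to show how the forget gate controls retention.

```python
import math
from dataclasses import dataclass


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


@dataclass
class GateParams:
    w: float  # weight on the current input x_t
    u: float  # weight on the previous hidden state h_{t-1}
    b: float  # bias


def lstm_step(x, h_prev, c_prev, forget, inp, out, cand):
    """One step of a scalar LSTM cell (illustrative, not a trained model)."""
    f = sigmoid(forget.w * x + forget.u * h_prev + forget.b)  # fraction of old memory kept
    i = sigmoid(inp.w * x + inp.u * h_prev + inp.b)           # fraction of new content written
    o = sigmoid(out.w * x + out.u * h_prev + out.b)           # fraction of memory exposed
    g = math.tanh(cand.w * x + cand.u * h_prev + cand.b)      # candidate new content
    c = f * c_prev + i * g                                    # updated cell state
    h = o * math.tanh(c)                                      # new hidden state
    return h, c


def run(forget_bias: float, inputs=(1.0, 0.0, 0.0, 0.0, 0.0)) -> float:
    """Feed one salient input followed by 'silence' and return the final cell state."""
    forget = GateParams(w=0.0, u=0.0, b=forget_bias)
    inp = GateParams(w=10.0, u=0.0, b=-5.0)  # writes only when the input is large
    out = GateParams(w=0.0, u=0.0, b=5.0)
    cand = GateParams(w=1.0, u=0.0, b=0.0)
    h, c = 0.0, 0.0
    for x in inputs:
        h, c = lstm_step(x, h, c, forget, inp, out, cand)
    return c


print(round(run(5.0), 3))   # forget gate near 1: the early input is still remembered
print(round(run(-5.0), 3))  # forget gate near 0: the memory is erased almost immediately
```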

Machine Learning Training Techniques

| Type of Method | Description | Key Benefits |
|---|---|---|
| Reinforcement Learning | Learn by trial and error using rewards to shape behavior | Finds balance between exploration and exploitation; ideal for context continuity |
| Supervised Learning | Learn from labeled datasets, predicting output from input | Accurate when high-quality, labeled data is available |
| Transfer Learning | Apply knowledge from one domain to another | Efficient use of pre-existing models; less data required for training |

The datasets used by conversational chatbots are as varied as the methods behind their creation, encompassing a wide array of information to improve functionality and response accuracy.

Machine Learning Models

| Type of Method | Description | Key Benefits |
|---|---|---|
| LSTM | Improves upon the RNN by managing memory across time intervals | Better at capturing long-term dependencies; handles context in conversations |
| BERT | Pre-trained transformer model with deep bidirectional context | Excels at understanding context and nuance in language |
| RNN | Neural network that processes a sequence one element at a time, carrying context forward | Basic building block for sequential data processing |
| ELMo | Deep contextualized word representations | Handles context better than traditional (static) word embeddings |
| MDP (Markov Decision Process) | Models decision-making with states, actions, and rewards | Framework for modeling environments with clear rules and outcomes |
| GPT-3 | Large autoregressive language model capable of various tasks | Powerful generative capabilities; adaptable to many uses with fine-tuning |
| Seq2Seq RNN | Encoder-decoder structure for transforming sequences | Suitable for problems where both input and output are sequences |

Datasets used by conversational chatbots:

- Twitter-related datasets

- OpenSubtitles dataset

- MovieDiC or Cornell Movie-Dialogs Corpus

- Wikipedia and BookCorpus

- Television series transcripts

- SemEval-2015

- Amazon Reviews and Amazon QA

- Data collected via the Amazon Mechanical Turk platform

- Foursquare

- CoQA

- Application-specific historical datasets

- Others (e.g., course materials)

Over the last decade, chatbot development has shifted from simple domain-locked chatbots to chatbots based on advanced machine learning techniques such as Seq2Seq RNNs, LSTMs, and BERT. Researchers are actively working to improve chatbot responses, for example by addressing the tendency of legacy RNN models trained with a maximum-likelihood objective to produce generic, safe responses like ‘I don’t know’. Work on refining generative models and integrating reinforcement learning aims to enrich conversation quality and keep the dialogue consistent with its context. The primary challenge is identifying and maintaining the conversation context throughout the user interaction, since that context is critical for engaging and coherent user-chatbot conversations.
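Why does maximum likelihood favor ‘I don’t know’? Such replies are probable after almost any input, so they often win when candidates are ranked purely by log p(reply | input). One mitigation proposed in the neural dialogue literature is maximum mutual information (MMI) reranking, which subtracts a weighted unconditional term: score = log p(T|S) - λ·log p(T). The sketch below applies that scoring to three candidate replies. The log-probabilities are made-up illustrative numbers, not outputs of a real model, and λ = 0.5 is an arbitrary choice.

```python
from typing import Dict, Tuple

# Hypothetical candidate replies to the input "My card was declined at checkout".
# Each entry: (log p(T|S) from a seq2seq model, log p(T) from an unconditional LM).
# These numbers are illustrative only.
CANDIDATES: Dict[str, Tuple[float, float]] = {
    "I don't know.": (-2.0, -1.5),
    "Sorry to hear that. Was it a debit or credit card?": (-2.6, -9.0),
    "Okay.": (-2.3, -1.8),
}


def rank_by_likelihood(cands: Dict[str, Tuple[float, float]]):
    """Standard maximum-likelihood choice: highest log p(T|S) first."""
    return sorted(cands, key=lambda t: cands[t][0], reverse=True)


def rank_by_mmi(cands: Dict[str, Tuple[float, float]], lam: float = 0.5):
    """MMI-style score: log p(T|S) - lam * log p(T).

    Subtracting the unconditional log-probability penalizes replies that are
    likely no matter what the user said (e.g. "I don't know.").
    """
    return sorted(cands, key=lambda t: cands[t][0] - lam * cands[t][1], reverse=True)


print("ML: ", rank_by_likelihood(CANDIDATES)[0])
print("MMI:", rank_by_mmi(CANDIDATES)[0])
```

With these numbers, maximum likelihood picks the generic reply, while the MMI score favors the specific follow-up question because it is unlikely in general but likely given this particular input.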

How do LLMs Change Things?

The advent of Large Language Models (LLMs) like GPT has heralded a new era for conversational AI, fundamentally altering the way voice bots engage with users. These powerful models, trained on massive amounts of text, have shifted the paradigm from simple question-and-response interactions to complex, nuanced communication. LLMs revolutionize the conversational AI landscape by providing bots with a deeper understanding of language context and the subtleties of human dialogue. Unlike their rule-based predecessors, LLM-based bots are adept at interpreting a wide array of language inputs, making sense of ambiguous phrasing, and generating responses that sound strikingly human. This transformation is akin to having a conversation with an entity that understands and can predict user intent, rather than a machine blindly following a script.

At the core of LLMs lies their ability to predict the most likely next word (more precisely, the next token) in a sequence. This simple-sounding process belies the complexity behind it. Through statistical learning over vast text corpora, LLMs develop a probabilistic model of how language is structured and used in real-world scenarios. Conversational AI bots build on this to construct coherent, contextually relevant text, leading to more engaging and natural conversations. However, LLMs alone are not synonymous with full-fledged artificial intelligence: they excel at one thing, generating text that plausibly follows from a given prompt. The conversational AI system surrounding the LLM provides the strategic direction, ensuring conversations are purposeful and aligned with user goals. This system uses higher-level mechanisms to manage dialogue flow, incorporate business logic, enforce compliance, and draw on external data when necessary. The LLM acts as the linguistic engine within this broader apparatus, supplying human-like articulation.
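The next-word prediction loop can be illustrated with a toy bigram model: count which word follows which in a small corpus, turn the counts into probabilities, and repeatedly append the most probable next word (greedy decoding). This is only an analogy under stated simplifications: real LLMs work on subword tokens, condition on the entire preceding context with a transformer, and often sample instead of always taking the most likely option. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict

# Tiny hypothetical training corpus.
CORPUS = [
    "how can i help you today",
    "how can i reset my password",
    "you can reset your password online",
    "i can help you reset your password",
]


def train_bigram(sentences):
    """Count next-word frequencies for each word (with start/end markers)."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_distribution(counts, prev):
    """Convert raw counts into P(next | prev)."""
    total = sum(counts[prev].values())
    return {w: c / total for w, c in counts[prev].items()}


def generate_greedy(counts, prompt, max_words=10):
    """Repeatedly append the single most probable next word."""
    words = prompt.split()
    for _ in range(max_words):
        dist = next_word_distribution(counts, words[-1])
        if not dist:
            break  # word never seen in training: nothing to predict
        best = max(dist, key=dist.get)  # ties go to the first-seen word
        if best == "</s>":
            break
        words.append(best)
    return " ".join(words)


model = train_bigram(CORPUS)
print(next_word_distribution(model, "can"))
print(generate_greedy(model, "how can"))
```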

The growing scale of Large Language Models (LLMs) such as GPT-3 and GPT-4 has substantially affected the field of conversational AI. GPT-3 has 175 billion parameters (OpenAI has not disclosed GPT-4’s size), and models at this scale capture far more intricate patterns of language and human dialogue than their predecessors. As Daniel Valdenegro of the Leverhulme Centre for Demographic Science notes, our understanding of these models’ capabilities must evolve as their technical capacity grows, while remaining critical of inflated claims about their reasoning abilities and of predictions, made without substantial evidence, that they will achieve Artificial General Intelligence (AGI). GPT-4, the most recent model at the time of writing, demonstrates “polydisciplinarity”: the fluid integration of knowledge across disciplines, a factor that could be crucial in the quest for superintelligence. In practice, this lets GPT-4 engage with a wide range of complex subjects, from ethical considerations in AI regulation to global geopolitical analysis, with a coherence and composure reminiscent of a well-informed, rational human expert.

In short, while LLMs like GPT-4 excel at text generation, they are ultimately statistical models lacking true understanding or consciousness. Their continued development nonetheless pushes the boundary of what is technically possible in AI, constantly reshaping the landscape of conversational voice bots and raising profound questions and possibilities for the future of technology and humanity.

Why Voice is Essential for AI Bots

The human voice is a cornerstone of communication, and in today’s fast-paced digital environment, the integration of voice within AI bots has become an indispensable asset. AI voice bots, equipped with sophisticated voice recognition and natural language understanding, extend the familiarity and ease of everyday conversations to digital interactions.

A study on the effectiveness of using voice assistants in learning, conducted by psychology PhDs at a Spanish university, suggests that voice interfaces, particularly Intelligent Personal Assistants (IPAs), offer promising benefits for students’ access to Learning Management Systems (LMS) and for their satisfaction with teaching. These benefits were particularly evident during the COVID-19 crisis in the study’s Health Sciences university setting. Although the technology is complex and still at an early stage of development, its convenience and its potential for personalized learning and collaborative work are evident.

AI bots that harness voice move beyond the limits of traditional text-based interfaces, letting users converse as they would with another person. This natural mode of interaction is more intuitive and can speed up task completion and the resolution of queries. For users who are multitasking or have accessibility needs, voice interaction is more than a convenience; it is a lever of inclusion and efficiency in their digital experience. Voice AI acts as a bridge between human conversation and digital processes: an AI voice bot can deliver personalized support that conveys some of the nuance of human emotion and empathy, leading to more satisfying user experiences. Voice responses can adapt their tone to the context, conveying a degree of understanding that text-based systems struggle to match and capturing more of the subtlety of human conversation.

Modern customers favor self-service portals for their convenience and speed, and voice AI bots extend this preference with hands-free, frictionless support. Whether updating account details or processing transactional requests, voice AI bots can perform tasks much as human agents would, delivering consistent, 24/7 service without the wait times associated with human-operated call centers.

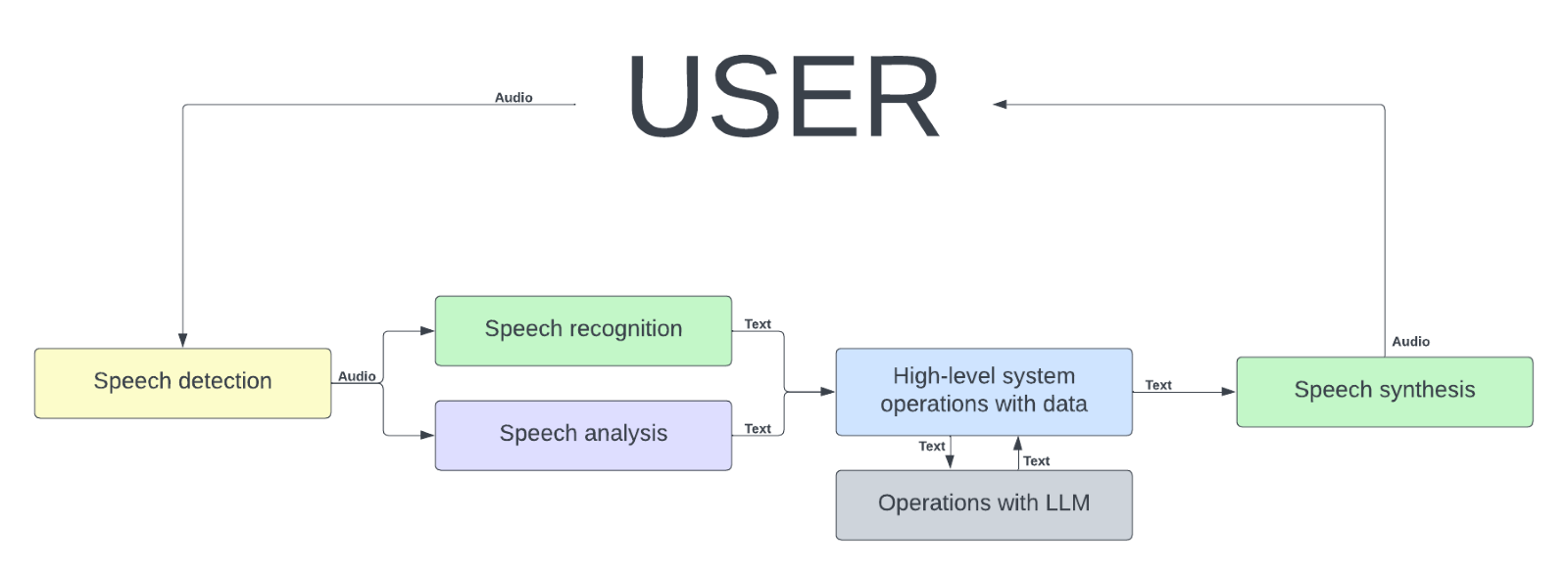

How Does It Work?

A conversational AI voice bot operates through the following steps:

1. Speech Detection.

The operation of a conversational AI voice bot begins with the user’s speech, which serves as the input for the entire interaction process. As the user begins to speak, the voice bot utilizes Speech Detection, often powered by Voice Activity Detection (VAD), to identify that the user is engaging with the system and to capture the relevant audio stream.
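Production systems typically use trained VAD models, but the core idea can be sketched with a simple energy threshold: split the audio into short frames, flag frames whose root-mean-square (RMS) energy exceeds a threshold, and keep a short "hangover" so brief pauses inside an utterance do not end the segment. The frame length, threshold, and hangover values below are hypothetical and would need tuning for real microphones and noise levels.

```python
import math
from typing import List, Tuple


def frame_rms(samples: List[float], frame_len: int) -> List[float]:
    """Root-mean-square energy of consecutive non-overlapping frames."""
    rms = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms.append(math.sqrt(sum(s * s for s in frame) / frame_len))
    return rms


def detect_speech(samples: List[float], sample_rate: int = 16000, frame_ms: int = 20,
                  threshold: float = 0.02, hangover_frames: int = 5) -> List[Tuple[float, float]]:
    """Return (start_sec, end_sec) segments where frame energy suggests speech."""
    frame_len = int(sample_rate * frame_ms / 1000)
    segments, start, silent_run = [], None, 0
    for idx, energy in enumerate(frame_rms(samples, frame_len)):
        if energy >= threshold:
            if start is None:
                start = idx  # speech onset
            silent_run = 0
        elif start is not None:
            silent_run += 1
            if silent_run > hangover_frames:  # pause long enough: close the segment
                end = idx - silent_run + 1    # first silent frame
                segments.append((start * frame_len / sample_rate, end * frame_len / sample_rate))
                start, silent_run = None, 0
    if start is not None:  # audio ended while speech was still active
        end = len(samples) // frame_len - silent_run
        segments.append((start * frame_len / sample_rate, end * frame_len / sample_rate))
    return segments


# Synthetic test signal: 0.5 s silence, 1 s of a 200 Hz tone standing in for speech, 0.5 s silence.
sr = 16000
signal = [0.0] * (sr // 2)
signal += [0.3 * math.sin(2 * math.pi * 200 * n / sr) for n in range(sr)]
signal += [0.0] * (sr // 2)
print(detect_speech(signal, sample_rate=sr))
```

The detected segment is what would be passed on to speech recognition; everything outside it can be discarded, which saves computation and avoids transcribing background silence.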

2. Speech Recognition.

Once speech is detected and the audio segment isolated, the first stage of processing begins: Speech Recognition. Here, a machine learning (ML) model transcribes the spoken words into text. This process, known as Automatic Speech Recognition (ASR), is critical because it must cope with the variability of human speech, such as different accents and dialects, to produce text that the rest of the system can process.
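The article does not specify which ASR approach is used. As one common example, many neural ASR models trained with Connectionist Temporal Classification (CTC) output a probability distribution over characters for every short audio frame, and the simplest way to turn that into text is greedy (best-path) decoding: take the most likely symbol per frame, merge consecutive repeats, then drop the special "blank" symbol. The vocabulary and per-frame probabilities below are hypothetical.

```python
from itertools import groupby
from typing import List, Sequence

BLANK = "_"  # CTC blank symbol
VOCAB = [BLANK, "h", "i", " ", "o"]  # hypothetical character vocabulary


def ctc_greedy_decode(frame_probs: Sequence[Sequence[float]], vocab: List[str]) -> str:
    """Best-path CTC decoding: argmax per frame, collapse repeats, remove blanks."""
    best_path = [vocab[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]
    collapsed = [symbol for symbol, _ in groupby(best_path)]  # merge repeated symbols
    return "".join(s for s in collapsed if s != BLANK)


# Hypothetical per-frame probabilities for the word "hi" (one row per audio frame).
frames = [
    [0.1, 0.8, 0.05, 0.03, 0.02],   # h
    [0.2, 0.7, 0.05, 0.03, 0.02],   # h again (merged with the previous frame)
    [0.9, 0.05, 0.02, 0.02, 0.01],  # blank
    [0.1, 0.05, 0.8, 0.03, 0.02],   # i
    [0.6, 0.1, 0.2, 0.05, 0.05],    # blank
]
print(ctc_greedy_decode(frames, VOCAB))
```

The blank symbol is what lets a CTC model emit genuinely doubled letters: a sequence like "o", blank, "o" survives as "oo", whereas "o", "o" collapses to a single "o".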

3. Speech Analysis.

Simultaneously, or as a following step, Speech Analysis takes place. This step goes beyond transcription, as the system parses the audio for additional layers of information, like the user’s emotions or specific intonations, which can provide further context to the conversation. Emotion recognition capability allows the voice bot to respond accordingly, enhancing the interaction with a degree of empathy often lacking in traditional systems.
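Emotion and intonation analysis typically relies on prosodic features, such as pitch (fundamental frequency) and energy, that a downstream classifier can use. As a hedged illustration of one such feature, the sketch below estimates pitch with plain autocorrelation: it finds the lag at which the signal best matches a shifted copy of itself and converts that lag to a frequency. The 60 to 400 Hz search range is an assumed range for speaking voices; robust pitch trackers (for example, YIN-style methods) add many refinements.

```python
import math
from typing import List


def estimate_pitch(frame: List[float], sample_rate: int,
                   fmin: float = 60.0, fmax: float = 400.0) -> float:
    """Estimate the fundamental frequency (Hz) of a voiced frame via autocorrelation."""
    mean = sum(frame) / len(frame)
    x = [s - mean for s in frame]  # remove any DC offset
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(x) - 1)
    best_lag, best_corr = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        corr = sum(x[n] * x[n + lag] for n in range(len(x) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag


sr = 16000
# 40 ms synthetic "voiced" frame with a 200 Hz fundamental.
frame = [math.sin(2 * math.pi * 200 * n / sr) for n in range(int(0.04 * sr))]
print(round(estimate_pitch(frame, sr)))
```

Running the estimator frame by frame yields a pitch contour; in many languages, for instance, a rising contour at the end of an utterance often marks a question, which is the kind of cue a speech-analysis step can pass along with the transcript.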

4. Internal Text Data Processing.

Once the user’s spoken input is transcribed into text, the system handles the query internally. This is where the bot’s core capabilities come into play. The text undergoes Natural Language Understanding (NLU) to determine the user’s intent and take the conversation’s context into account, alongside sub-tasks such as maintaining a user profile, tracking session duration, managing message history for continuity, and issuing intent directives. Large Language Models (LLMs) are employed at this stage to generate an appropriate, contextually relevant, human-like response. These models represent text as embeddings, vectors in a high-dimensional space that encode semantic and syntactic meaning, which enables the AI to capture the subtleties and implications of the text.
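The bookkeeping described above (user profile, session duration, message history, intent directives) and embedding-based intent matching can be sketched together. To stay self-contained, the sketch uses a toy bag-of-words vector with cosine similarity in place of a learned embedding model, and it stops at assembling the prompt that would be sent to an LLM. The intent names, example phrases, `Session` class, 0.3 confidence cut-off, and prompt format are all hypothetical.

```python
import math
import time
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical intents, each described by a few example utterances.
INTENT_EXAMPLES: Dict[str, List[str]] = {
    "reset_password": ["i forgot my password", "reset my password please"],
    "check_balance": ["what is my account balance", "how much money do i have"],
    "talk_to_human": ["let me speak to an agent", "i want a human"],
}


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would use a learned embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def classify_intent(text: str) -> Tuple[str, float]:
    """Return the intent whose closest example is most similar to `text`, with its score."""
    query = embed(text)
    scores = {
        intent: max(cosine(query, embed(ex)) for ex in examples)
        for intent, examples in INTENT_EXAMPLES.items()
    }
    best = max(scores, key=scores.get)
    return best, scores[best]


@dataclass
class Session:
    user_profile: Dict[str, str]
    started_at: float = field(default_factory=time.monotonic)
    history: List[Tuple[str, str]] = field(default_factory=list)  # (role, text)

    def duration_s(self) -> float:
        return time.monotonic() - self.started_at

    def build_prompt(self, user_text: str, min_score: float = 0.3, max_turns: int = 6) -> str:
        """Record the user turn, derive an intent directive, and assemble an LLM prompt."""
        intent, score = classify_intent(user_text)
        # Low similarity to every known intent: ask the user to clarify instead of guessing.
        directive = intent if score >= min_score else "ask_clarifying_question"
        self.history.append(("user", user_text))
        recent = "\n".join(f"{role}: {text}" for role, text in self.history[-max_turns:])
        return (
            f"User name: {self.user_profile.get('name', 'unknown')}\n"
            f"Intent directive: {directive}\n"
            f"Conversation so far:\n{recent}\nassistant:"
        )

    def record_reply(self, reply: str) -> None:
        """Store the bot's reply so later turns keep the context."""
        self.history.append(("assistant", reply))


session = Session(user_profile={"name": "Alex"})
prompt = session.build_prompt("I forgot my password again")
print(prompt)
```

Keeping only the most recent turns (`max_turns`) is one simple way to bound prompt length; the trade-off is that older context is dropped unless it is summarized or stored in the user profile.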

5. Speech Synthesis.

Next, the system readies its response. The generated text, representing the voice bot’s intended reply, is handed off to a Text-to-Speech (TTS) system. The TTS engine performs Speech Synthesis, a process wherein the text is converted back into spoken words. This synthesized speech is designed to mimic natural human tones, ensuring the response is delivered in a manner that aligns with human communication patterns, further bridging the gap between AI and human interaction.
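Many TTS engines accept SSML (Speech Synthesis Markup Language, a W3C standard), which lets the application steer how text is spoken. As a hedged sketch, the function below wraps the reply in SSML `<prosody>` and `<break>` elements and chooses the speaking rate and pitch from a detected emotion label. The emotion-to-prosody mapping and the naive sentence splitting on periods are assumptions, and TTS engines differ in which SSML features and values they support.

```python
from xml.sax.saxutils import escape

# Hypothetical mapping from detected user emotion to speaking style.
PROSODY_BY_EMOTION = {
    "frustrated": {"rate": "slow", "pitch": "low"},
    "neutral": {"rate": "medium", "pitch": "medium"},
    "happy": {"rate": "medium", "pitch": "high"},
}


def to_ssml(reply: str, emotion: str = "neutral") -> str:
    """Wrap reply text in SSML, adjusting prosody and pausing between sentences."""
    style = PROSODY_BY_EMOTION.get(emotion, PROSODY_BY_EMOTION["neutral"])
    sentences = [s.strip() for s in reply.split(".") if s.strip()]  # naive sentence split
    body = '<break time="300ms"/>'.join(escape(s) + "." for s in sentences)  # escape &, <, >
    return (
        '<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en-US">'
        f'<prosody rate="{style["rate"]}" pitch="{style["pitch"]}">{body}</prosody>'
        "</speak>"
    )


ssml = to_ssml("Sorry about that. Let's fix your card & try again.", emotion="frustrated")
print(ssml)
```

Slowing down and lowering pitch for a frustrated caller is just one plausible policy; the point is that the speech-analysis output can shape not only what the bot says but how it sounds.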

Finally, the voice bot delivers the audio output to the user, completing the cycle of interaction in a way that replicates human conversation as seamlessly and naturally as possible. Converting speech into text in the middle of this cycle is crucial because current AI systems, including LLMs, primarily operate on text. These models use embeddings to interpret and generate language, handling semantic meaning effectively, and text is also easier to process and store, which supports learning and personalization over time.
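Putting the five stages together, one conversational turn can be sketched as a pipeline of pluggable functions. Every stage in the sketch is a hypothetical stub standing in for a real VAD, ASR model, emotion classifier, LLM, and TTS engine; the `VoiceBotPipeline` class is an illustrative assumption. What it shows is the data flow (audio in, text in the middle, audio out) and that each stage can be swapped independently.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class VoiceBotPipeline:
    """One conversational turn: audio -> text -> reply text -> audio."""
    detect_speech: Callable[[bytes], Optional[bytes]]  # VAD: speech segment or None
    transcribe: Callable[[bytes], str]                  # ASR
    analyze: Callable[[bytes], str]                     # emotion/intonation label
    respond: Callable[[str, str, List[str]], str]       # NLU + LLM, working on text
    synthesize: Callable[[str, str], bytes]             # TTS

    def handle_turn(self, audio: bytes, history: List[str]) -> Optional[bytes]:
        speech = self.detect_speech(audio)
        if speech is None:
            return None  # no speech detected: keep listening
        text = self.transcribe(speech)
        emotion = self.analyze(speech)
        reply = self.respond(text, emotion, history)
        history.extend([f"user: {text}", f"bot: {reply}"])  # text is easy to store and reuse
        return self.synthesize(reply, emotion)


# Hypothetical stubs so the data flow can be run end to end.
pipeline = VoiceBotPipeline(
    detect_speech=lambda audio: audio if audio.strip(b"\x00") else None,
    transcribe=lambda speech: speech.decode("utf-8"),  # pretend the audio bytes are the transcript
    analyze=lambda speech: "neutral",
    respond=lambda text, emotion, hist: f"You said: {text}",
    synthesize=lambda reply, emotion: reply.encode("utf-8"),
)

history: List[str] = []
silence_out = pipeline.handle_turn(b"\x00\x00\x00", history)  # silence only
reply_audio = pipeline.handle_turn(b"reset my password", history)
print(silence_out, reply_audio, history)
```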

The evolution of AI voice bots is marked by a set of vital features that enhance conversational experiences. Here are the key features that empower voice bots to facilitate smooth and effective interactions:

1. Active Listening

2. Personalization

3. Seamless Handover

4. Continuous Learning

5. Intent Recognition

6. Quick Responses

7. Accessibility

By integrating these features, AI voice bots become more capable, efficient, and user-friendly, enabling businesses to deliver superior customer service while also addressing the diverse needs and preferences of their users. The continuous advancements in AI and machine learning ensure that voice bots will remain at the forefront of digital interaction and customer engagement strategies.

Get Ready for the Future with Processica

Advancements in LLMs will not only refine the nuance and depth of AI-driven conversations but also unlock new avenues of personalization and accessibility. Businesses will increasingly harness these sophisticated tools, tailoring voice bots more precisely to their services and clientele while addressing the challenges of contextual relevance. With ongoing enhancements in machine learning and natural language processing, we can anticipate AI voice bots that are more intuitive, capable, and integral to our daily lives, steadily approaching the seamlessness of human interaction. The march towards AI companions that are indistinguishable from human counterparts in conversation is inexorable, promising a future where AI assists us with unprecedented efficiency, empathy, and elegance.

Processica excels in creating custom AI voice bots tailored to meet the unique needs of your business. By leveraging advanced artificial intelligence and machine learning technologies, Processica designs voice bots with an innovative dynamic prompting approach that enhances customer interactions, streamlines operations, and boosts overall efficiency. Imagine a voice bot that understands your specific industry jargon and can seamlessly handle customer queries, providing instant, accurate responses. This level of customization ensures that your business stands out in a competitive market. Explore how Processica’s innovative solutions can transform your customer service experience by contacting us for a personalized consultation today.